notes

Caching

Hit Ratio


Cache Hit Ratio

cacheHitRatio = cacheHitCount / (cacheHitCount + cacheMissCount)

Relative performance

relativePerformance = 1 / (cacheHitCost * cacheHitRatio + cacheMissCost * (1 - cacheHitRatio))
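The two formulas above can be checked with a small calculation. This is only a sketch: the hit/miss counts and the costs (1 ms per hit, 50 ms per miss) are made-up numbers, and the function names are illustrative.

```python
def cache_hit_ratio(hit_count: int, miss_count: int) -> float:
    """cacheHitCount / (cacheHitCount + cacheMissCount)."""
    total = hit_count + miss_count
    if total == 0:
        return 0.0  # no requests yet, so no hits either
    return hit_count / total


def relative_performance(hit_cost: float, miss_cost: float, hit_ratio: float) -> float:
    """1 / (average cost per request), using the formula from the notes."""
    return 1 / (hit_cost * hit_ratio + miss_cost * (1 - hit_ratio))


# Assumed numbers, for illustration only.
ratio = cache_hit_ratio(hit_count=900, miss_count=100)
without_cache = relative_performance(hit_cost=1.0, miss_cost=50.0, hit_ratio=0.0)
with_cache = relative_performance(hit_cost=1.0, miss_cost=50.0, hit_ratio=ratio)
speedup = with_cache / without_cache

print(f"hit ratio: {ratio:.2f}, speedup over no cache: {speedup:.2f}x")
```

Because the miss cost dominates the average, even a small drop in hit ratio noticeably reduces the speedup.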

Factors which affect cache hit ratio

- Key Space - how many distinct keys are possible

- Key TTL - how long a key stays in the cache before it expires

- Cache Size - how many objects we can store

HTTP Cache

- MDN HTTP Caching


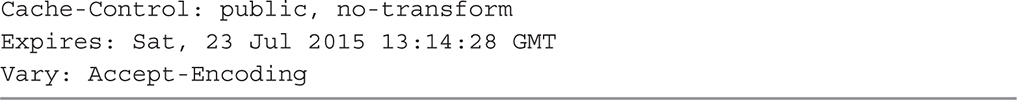

Static files headers


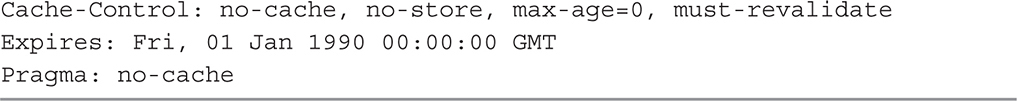

Non-cacheable objects HTTP headers


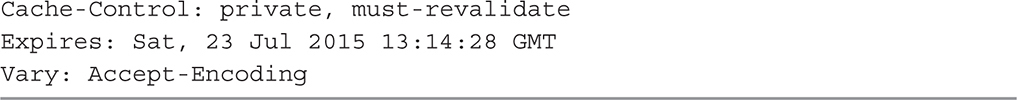

Private files cache headers

- Best Practices

- Do not use Cache-Control: max-age and Expires together. max-age takes precedence over Expires, so sending both is redundant and makes the behaviour confusing

- Do not use HTML caching meta tags (like http-equiv="cache-control"). They duplicate the cache logic of the HTTP headers and create confusing behaviour
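As a rough illustration of the three cases named above (static files, non-cacheable objects, private files), the sketch below maps each case to a Cache-Control header. The exact headers shown in the figures are not reproduced here; the values, the `CACHE_HEADERS` table and the `cache_headers` helper are assumptions chosen only to show the shape. In line with the best practices, no Expires header is sent alongside max-age.

```python
# Assumed example values; tune max-age to your own content.
CACHE_HEADERS = {
    # Versioned static assets: any cache may store them for a long time.
    "static": {"Cache-Control": "public, max-age=31536000, immutable"},
    # Responses that must never be stored by any cache.
    "non_cacheable": {"Cache-Control": "no-store"},
    # Per-user content: only the user's own browser may cache it.
    "private": {"Cache-Control": "private, max-age=600"},
}


def cache_headers(kind: str) -> dict:
    """Return a copy of the response headers for one of the three cases."""
    return dict(CACHE_HEADERS[kind])


for kind in CACHE_HEADERS:
    print(kind, cache_headers(kind))
```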

Cache Types

- Browser Cache

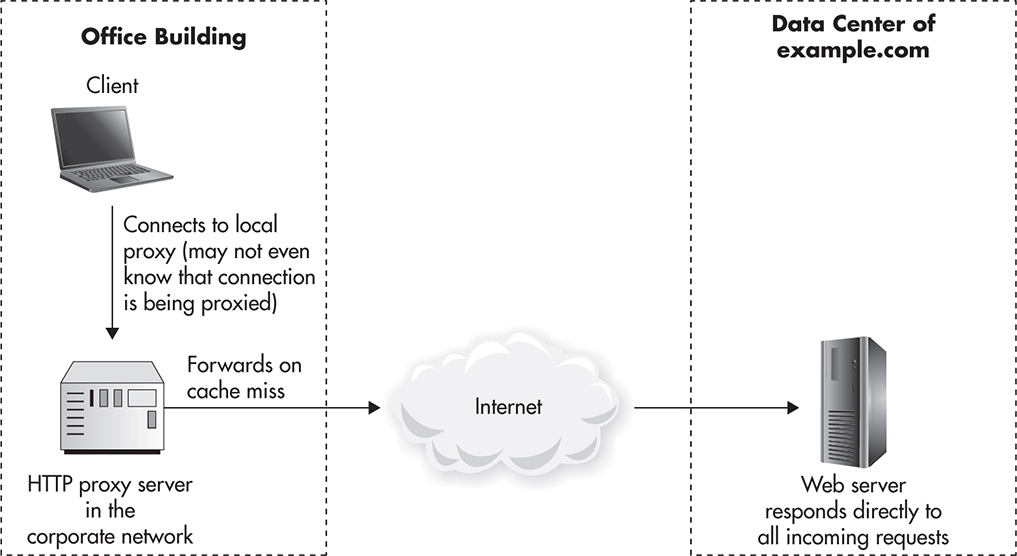

- Caching Proxy - set up by enterprises or ISPs.

- this type of proxy is losing popularity because of the wide adoption of HTTPS (proxies can no longer decrypt the traffic to inspect headers)

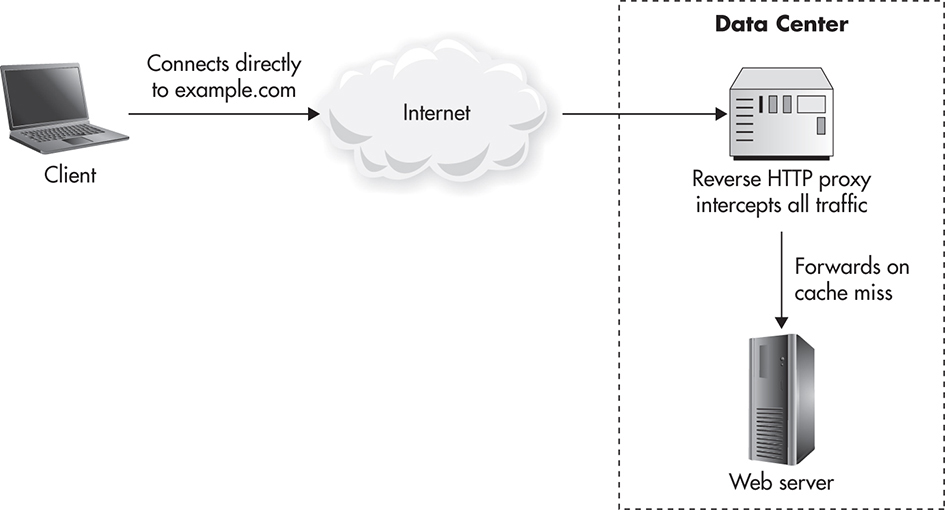

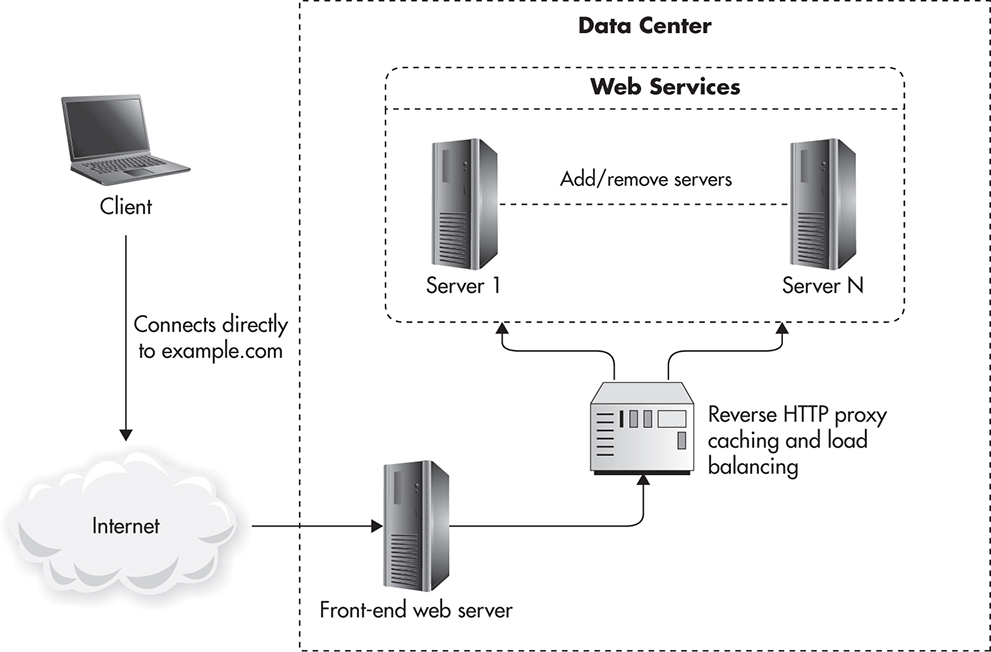

- Reverse Proxy


public


private


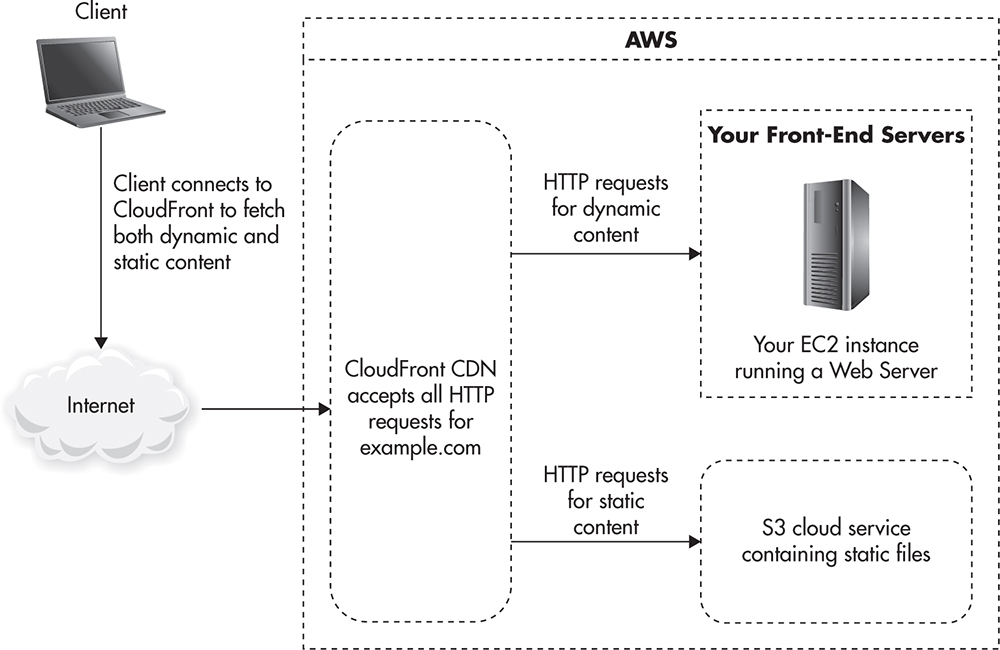

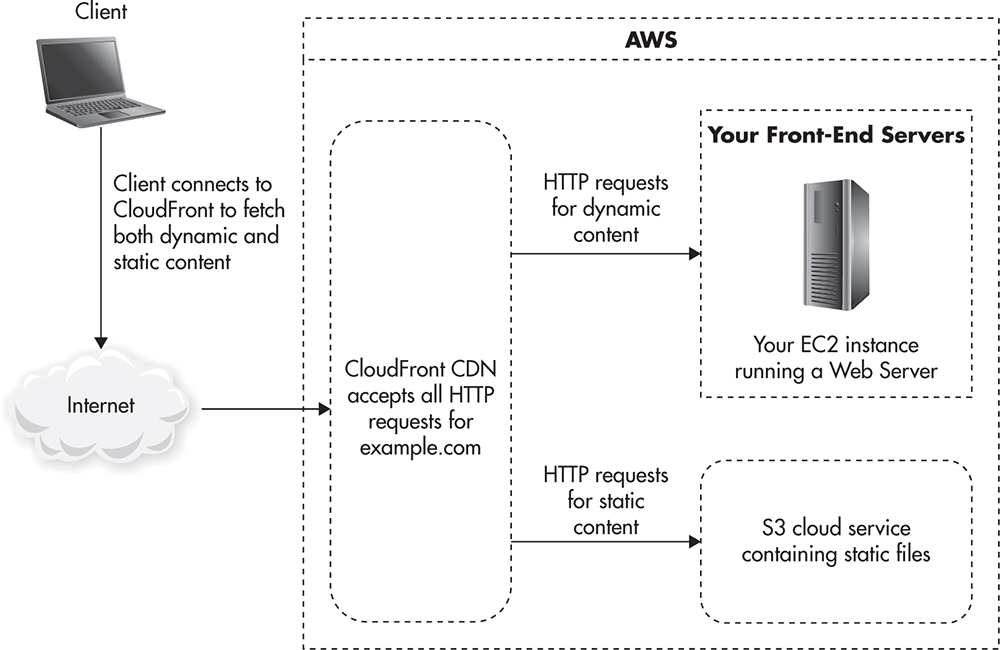

CDN


CDN Self Hosted App


CDN AWS


- Object Cache - the cache of database queries

Scaling Object Cache

- Data partitioning for an object cache is quite simple. Many client libraries accept a list of cache servers and distribute keys among them.

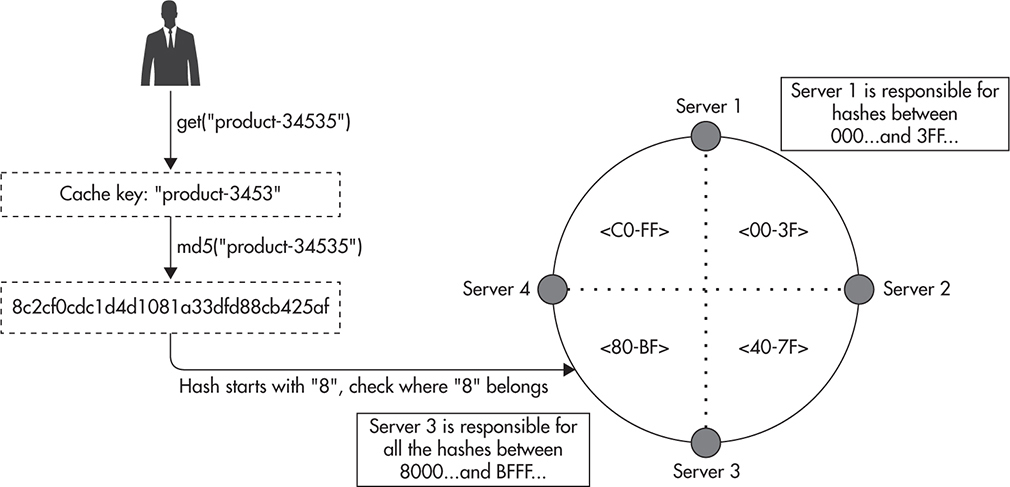

- A useful way to partition keys between shards:

- Assign every key a number from a fixed range (for example, the first two characters of its MD5 hash)

Every shard is responsible for the keys whose numbers fall within that shard's range:

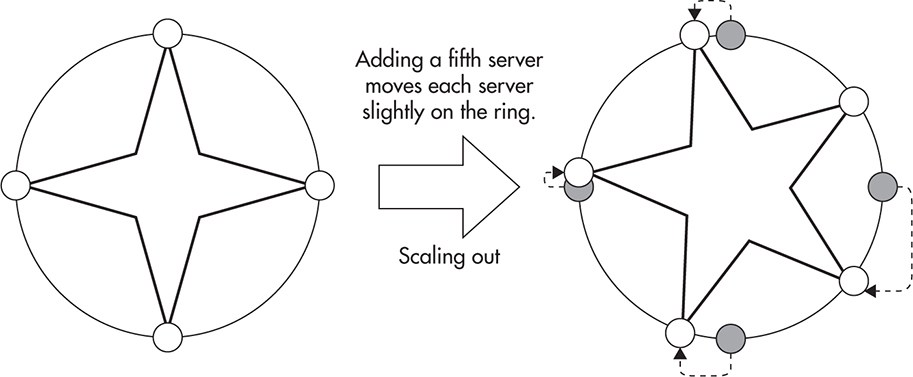

When a new shard is added, adjust the key ranges slightly so that only part of the keys moves to a different shard
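The range-based scheme described above can be sketched as follows. This is a minimal illustration, not a production client: the class name `RangeSharder`, the server names and the range boundaries are all hypothetical. The key number is taken from the first two hex characters of the MD5 hash, which gives a value from 0 to 255.

```python
import hashlib
from bisect import bisect_left


def key_number(key: str) -> int:
    """Map a key to 0..255 using the first two hex chars of its MD5 hash."""
    return int(hashlib.md5(key.encode("utf-8")).hexdigest()[:2], 16)


class RangeSharder:
    def __init__(self, upper_bounds, servers):
        # upper_bounds[i] is the inclusive upper end of the range owned by servers[i].
        if len(upper_bounds) != len(servers):
            raise ValueError("need exactly one upper bound per server")
        if list(upper_bounds) != sorted(upper_bounds) or upper_bounds[-1] != 255:
            raise ValueError("bounds must be sorted and end at 255")
        self.upper_bounds = list(upper_bounds)
        self.servers = list(servers)

    def server_for_number(self, number: int) -> str:
        # First range whose upper bound is >= number.
        return self.servers[bisect_left(self.upper_bounds, number)]

    def server_for(self, key: str) -> str:
        return self.server_for_number(key_number(key))


# Two shards, then a third one added by shifting the range boundaries.
old = RangeSharder([127, 255], ["cache-a", "cache-b"])
new = RangeSharder([84, 169, 255], ["cache-a", "cache-b", "cache-c"])

# Count how many of the 256 key numbers change owner after the resize.
moved = sum(
    1 for n in range(256) if old.server_for_number(n) != new.server_for_number(n)
)
print(f"{moved} of 256 key numbers changed shard")
```

Keys whose number stays inside the same shard's range keep their cached values after the resize; only the keys in the shifted parts of the ranges start cold on their new shard.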

Caching Optimization

- Decrease Key Space

- Increase TTL

- Decrease Object size


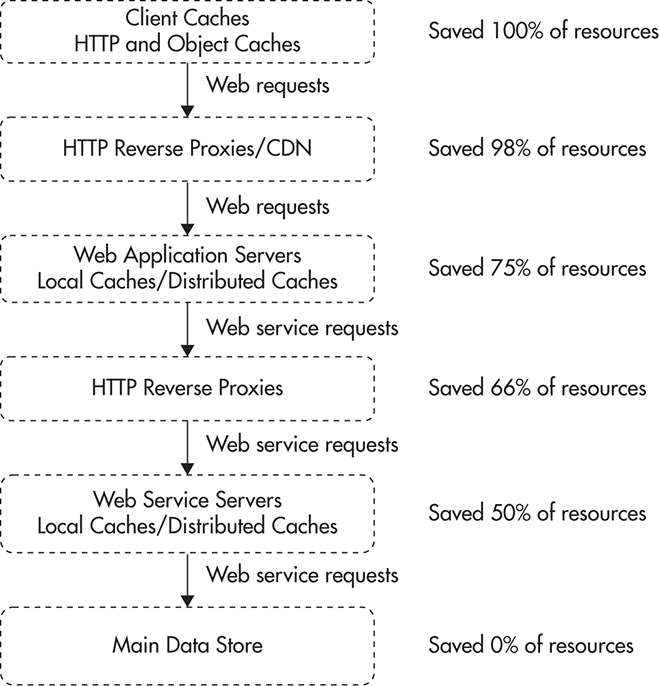

Cache High Up the Call Stack


Reuse cache among users - for example, we build an app for restaurant recommendations based on user location.

    # Bad URL
    GET /restaurants/search?lat=-11.11111&lon=22.2222

    # Good URL
    GET /restaurants/search?lat=-11.11&lon=22.22

We lose a little accuracy, but gain a lot in cache reuse, because nearby users now share the same URL.
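One way to produce the coarser URL is to round the coordinates before building the request path. The helper name `restaurant_search_path` and the default precision of two decimals are assumptions for this sketch; the right precision depends on how much location accuracy the app can give up.

```python
def restaurant_search_path(lat: float, lon: float, precision: int = 2) -> str:
    """Build the search path with coordinates rounded to `precision` decimals."""
    return f"/restaurants/search?lat={lat:.{precision}f}&lon={lon:.{precision}f}"


# Two users standing close to each other end up with the same cacheable URL.
first_user = restaurant_search_path(-11.11111, 22.2222)
second_user = restaurant_search_path(-11.11432, 22.21987)
print(first_user)
print(first_user == second_user)
```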

To decide what to cache, evaluate the aggregated time spent on each kind of request:

aggregated time spent = time spent per request * number of requests
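A small worked example of this formula, using invented endpoints and numbers purely for illustration, shows why the slowest request is not always the best caching candidate:

```python
# Hypothetical measurements: endpoint -> (time per request in ms, number of requests).
measurements = {
    "/report": (2000, 50),
    "/search": (120, 10_000),
    "/home": (15, 200_000),
}


def aggregated_time_spent(time_per_request_ms: int, request_count: int) -> int:
    """aggregated time spent = time spent per request * number of requests."""
    return time_per_request_ms * request_count


# Rank endpoints by total time spent, largest first.
ranked = sorted(
    ((name, aggregated_time_spent(t, n)) for name, (t, n) in measurements.items()),
    key=lambda item: item[1],
    reverse=True,
)

for name, total_ms in ranked:
    print(f"{name}: {total_ms} ms in total")
```

In this made-up data the fast but very frequent `/home` request accounts for the most total time, so caching it would pay off more than caching the slow but rare `/report`.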

Cache Eviction Strategies

- FIFO

- LIFO

- Least Recently Used (LRU)

- Most Recently Used (MRU)

- Least Frequently Used (LFU) - count how many times each entry was accessed and evict the entry with the lowest count.

- Random Replacement
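To make one of these strategies concrete, here is a minimal LRU sketch built on Python's `collections.OrderedDict`. The class name `LRUCache` and its `get`/`put` methods are illustrative choices, not a reference to any particular cache server.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity: int):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, most recent last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # evict the least recently used entry


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" is now more recent than "b"
cache.put("c", 3)  # cache is full, so "b" is evicted
print(cache.get("a"), cache.get("b"), cache.get("c"))
```

Switching `popitem(last=False)` to `popitem(last=True)` before inserting the new entry would turn the same structure into an MRU cache.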

Caching and Availability

- A cache should be used only to speed up requests, not to absorb load. If your system cannot handle the load without the cache, you reduce availability:

- Cache servers are not very reliable by nature, so they can fail.

- If your system cannot work without the cache, take the following into account:

- Thundering herd - when a popular key is missing from the cache, many concurrent requests go to the backend for the same key, so your system may have to process a huge number of identical requests simultaneously

- Cold cache - after a restart you need to "warm up" the cache, because an empty cache puts an enormous load on the database. To handle a restart:

- lock reads

- run a script that puts popular keys into the cache
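One common way to soften the thundering herd problem inside a single process is to let only one request load a missing key while the others wait for its result. The sketch below uses a per-key lock; the class `SingleLoadCache`, the `warm_up` helper and the slow loader are hypothetical names for this illustration, and the lock table is never cleaned up, which a real implementation would need to handle.

```python
import threading
import time


class SingleLoadCache:
    def __init__(self, loader):
        self._loader = loader            # function that fetches a value on a miss
        self._values = {}
        self._key_locks = {}
        self._guard = threading.Lock()   # protects the two dicts above

    def get(self, key):
        with self._guard:
            if key in self._values:
                return self._values[key]
            lock = self._key_locks.setdefault(key, threading.Lock())
        with lock:  # only one thread per key gets past this point at a time
            with self._guard:
                if key in self._values:  # loaded by another thread while we waited
                    return self._values[key]
            value = self._loader(key)
            with self._guard:
                self._values[key] = value
            return value


def warm_up(cache, popular_keys):
    """Pre-load popular keys, for example from a script run after a restart."""
    for key in popular_keys:
        cache.get(key)


load_calls = []


def slow_loader(key):
    load_calls.append(key)  # record every trip to the "database"
    time.sleep(0.05)
    return key.upper()


cache = SingleLoadCache(slow_loader)
results = []
threads = [
    threading.Thread(target=lambda: results.append(cache.get("popular")))
    for _ in range(20)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(f"{len(results)} requests served with {len(load_calls)} load(s)")
```

This only deduplicates loads within one process; across several application servers the same idea needs a shared lock or a similar coordination mechanism.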